Medium-Range Weather Forecasting Updates

Discussing a weather foundation model and a recently published generative weather model.

In Medium-Range Weather Forecasting with Time- and Space-aware Deep Learning, I discussed how deep learning models are beginning to outperform traditional numerical weather prediction (NWP) methods in terms of both accuracy and computational efficiency. This post discusses two models that have recently been introduced in the literature: Aurora and GenCast. Although these models differ considerably in structure and targeted applications, they reflect the foundation and generative modeling motifs that have come to dominate other AI application domains like text and image processing. I have updated the original review to include a discussion of these models, which you can read below. If you would like to read the original review on Substack, here are Part 1, Part 2, Part 3, and Part 4.

Atmospheric foundation modeling

NWP models are, at their core, physics simulators. Better weather forecasts are downstream of better representations of the atmosphere and its medium-range dynamics. While atmospheric dynamics are well-approximated by a collection of differential equations, inferring these physical laws from scratch in the presence of data noise and measurement uncertainty can prove quite challenging for an AI-NWP model. In addition, training AI-NWP models only on ERA5 reanalysis data runs the risk of overfitting to the subtleties of that specific reanalysis procedure and makes predicting weather-related variables which are not tracked by ERA5, like atmospheric chemistry, extremely difficult.

Following the foundation modeling trend that has come to dominate other data domains like text, networks, and images, Bodnar et al. (2024) introduce a foundation model for atmospheric condition modeling which they call Aurora. The motivation for constructing a foundation model for atmospheric dynamics is that a wide variety of climate and atmospheric processes on Earth are known to be governed by a common (though perhaps latent) collection of physical rules. If one can construct a model that learns from and predicts dynamics across climate and atmospheric modalities, its generalization performance should improve across all modalities, as its more general state representation robustly approximates the physical laws governing each data source.

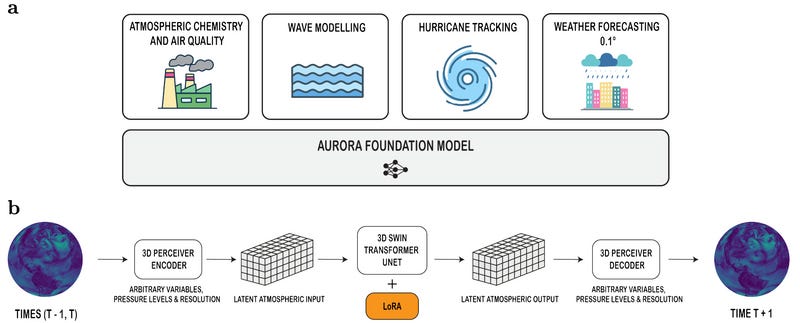

At its core, the Aurora model is architecturally similar to the FuXi and Pangu-Weather models. Its backbone is a 3D Swin Transformer U-Net, and its inputs are atmospheric variable states on a latitude-longitude grid at multiple pressure levels. An encoder first maps these atmospheric observations into a compressed latent representation, and the multi-stage Swin Transformer backbone then simulates the dynamics of this latent representation across a number of hidden layers. Decoder layers finally translate the latent weather state change predictions back onto the original latitude-longitude grid, providing an atmospheric state forecast.
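Transformer backbones of this kind typically operate on patch tokens rather than on individual grid points. The sketch below is a generic, ViT-style illustration under that assumption, not Aurora's actual encoder: a hypothetical `patchify` cuts a (pressure level, latitude, longitude) field into non-overlapping patches and projects them to latent tokens with a random matrix standing in for learned weights, and `unpatchify` places tokens back onto the grid, mirroring the encode/decode round trip. All sizes are toy values.

```python
import numpy as np

def patchify(field: np.ndarray, patch: int) -> np.ndarray:
    """Cut a (levels, lat, lon) field into flattened, non-overlapping patch tokens."""
    levels, n_lat, n_lon = field.shape
    assert n_lat % patch == 0 and n_lon % patch == 0, "grid must be divisible by the patch size"
    blocks = field.reshape(levels, n_lat // patch, patch, n_lon // patch, patch)
    # Reorder to (lat patch, lon patch, level, row in patch, column in patch), then flatten.
    blocks = blocks.transpose(1, 3, 0, 2, 4)
    return blocks.reshape(-1, levels * patch * patch)

def unpatchify(tokens: np.ndarray, levels: int, n_lat: int, n_lon: int, patch: int) -> np.ndarray:
    """Invert patchify: put each token's values back onto the latitude-longitude grid."""
    blocks = tokens.reshape(n_lat // patch, n_lon // patch, levels, patch, patch)
    blocks = blocks.transpose(2, 0, 3, 1, 4)
    return blocks.reshape(levels, n_lat, n_lon)

rng = np.random.default_rng(0)
levels, n_lat, n_lon, patch, d_latent = 13, 32, 64, 4, 96    # toy sizes, not Aurora's
field = rng.standard_normal((levels, n_lat, n_lon))

tokens = patchify(field, patch)                               # (128, 208)
w_embed = rng.standard_normal((tokens.shape[1], d_latent))    # stand-in for a learned encoder
latent = tokens @ w_embed                                     # what a Swin backbone would process
restored = unpatchify(tokens, levels, n_lat, n_lon, patch)    # decoder side: tokens back to grid
```

Nothing in `patchify` assumes a fixed grid size, so the same function handles any resolution divisible by the patch size, which hints at how patch-based encoders can accommodate datasets of differing resolution.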

The real technical novelty of the Aurora model lies in its ability to pretrain, finetune, and forecast across multiple atmospheric domains using data that vary in spatial resolution and spatio-temporal coverage. This flexibility necessitates a number of architectural and training alterations, including dynamic patch encoding and decoding, the absence of fixed positional biases, and low-rank adaptation (LoRA) for finetuning at higher resolution. While none of these alterations is novel in isolation, their combination within a single AI-NWP model is an impressive feat of engineering.
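Low-rank adaptation keeps a pretrained weight matrix frozen and learns only a small low-rank correction to it. Here is a minimal NumPy sketch of a LoRA-wrapped linear layer in the standard formulation (zero-initialized up-projection, update scaled by alpha / rank); the class name, layer sizes, and initialization scale are illustrative assumptions rather than Aurora's configuration.

```python
import numpy as np

class LoRALinear:
    """Linear layer y = x @ W + (alpha / r) * x @ A @ B, with W frozen.

    Only the low-rank factors A (d_in x r) and B (r x d_out) would be trained.
    """

    def __init__(self, w_frozen: np.ndarray, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_in, d_out = w_frozen.shape
        self.w = w_frozen                                    # pretrained weights, never updated
        self.a = rng.normal(0.0, 0.01, size=(d_in, rank))    # trainable down-projection
        self.b = np.zeros((rank, d_out))                     # trainable up-projection, zero init
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return x @ self.w + self.scale * (x @ self.a) @ self.b

    def num_trainable(self) -> int:
        return self.a.size + self.b.size

rng = np.random.default_rng(1)
w_pretrained = rng.standard_normal((512, 512))
layer = LoRALinear(w_pretrained, rank=8)
x = rng.standard_normal((4, 512))

print(layer.num_trainable(), w_pretrained.size)   # 8192 262144
```

Because B starts at zero, the wrapped layer initially reproduces the pretrained layer exactly, and finetuning touches about 3% as many parameters as the full 512-by-512 matrix.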

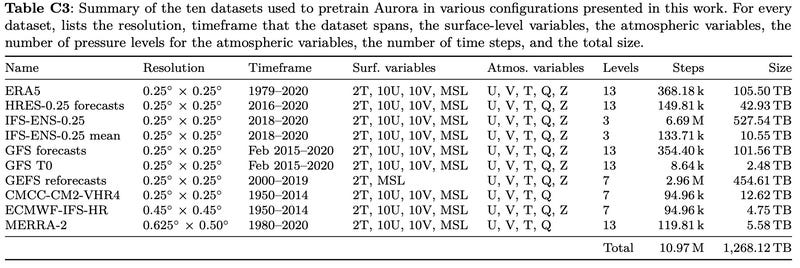

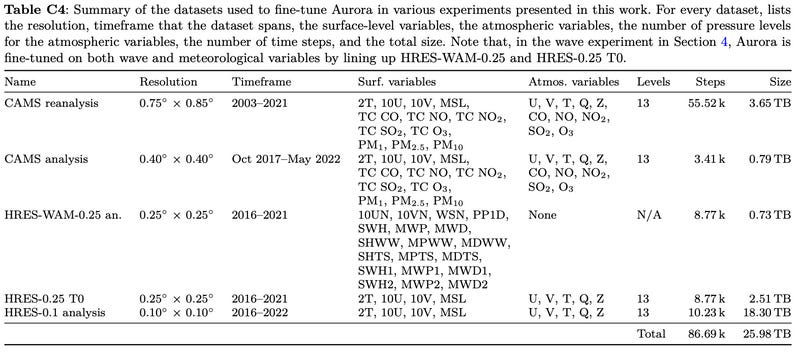

In contrast to prior work trained to minimize a loss function rolled out across multiple time horizons, the Aurora foundation model is pretrained on single-step prediction, minimizing the mean absolute error of the predicted next state. Longer forecasting horizons must be introduced by finetuning the foundation model with a multi-step forecast loss and backpropagating the error through the foundation model’s pretrained weights. This single-step state change objective constitutes the model’s pretraining task and is repeated across 10 different weather, climatological, and atmospheric chemistry datasets. This pretraining process is what categorizes Aurora as a single-horizon (6-hour) atmospheric state change foundation model.
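To make the difference between the two objectives concrete, here is a sketch that only evaluates the losses (no training) for a hypothetical toy model; in real finetuning an autodiff framework would backpropagate the rollout loss through every step. The function names and the toy dynamics are illustrative assumptions.

```python
import numpy as np

def one_step_mae(step_fn, state, next_state):
    """Pretraining-style objective: MAE of a single 6-hour prediction."""
    return float(np.mean(np.abs(step_fn(state) - next_state)))

def rollout_mae(step_fn, state, targets):
    """Finetuning-style objective: roll the model forward on its own outputs
    and average the MAE over every step of the rollout."""
    losses = []
    for target in targets:
        state = step_fn(state)                    # prediction becomes the next input
        losses.append(np.mean(np.abs(state - target)))
    return float(np.mean(losses))

def true_step(x):
    """Hypothetical 'true' atmosphere: decays by a factor of 0.9 per step."""
    return 0.9 * x

def model_step(x):
    """Hypothetical model that has learned a slightly wrong factor."""
    return 0.92 * x

x0 = np.ones(10)
targets = [0.9 ** k * x0 for k in range(1, 5)]    # four 6-hour steps = 24 hours

print(one_step_mae(model_step, x0, true_step(x0)))  # about 0.02
print(rollout_mae(model_step, x0, targets))         # about 0.042: errors compound
```

A small one-step error roughly doubles when averaged over a 24-hour rollout, which is why a model pretrained on single steps benefits from multi-step finetuning before it is used at longer horizons.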

Aurora generally outperforms IFS HRES and peer AI-NWP models like GraphCast and Pangu-Weather at medium-range weather forecasting, but the exact reason for its outperformance isn’t clear due to interactions between the model’s complicated pretraining and finetuning requirements, its diversity of data, and its increased parameter count. The Aurora authors show that a model pretrained on all of the datasets listed in Table C3 (above) enjoys about a 3% performance advantage over the same model pretrained only on ERA5 reanalysis data, the pretraining data underlying most other AI-NWP models. Aurora’s outperformance over competitor AI-NWP models and IFS HRES may therefore result from pretraining across a diversity of data sources and atmospheric variables, representing another win for general-purpose foundation modeling approaches to predictive modeling.

However, the authors also show that increasing the number of parameters by a factor of 10 yields an expected 6% performance gain across four Aurora architectures ranging from 120 million to 1.3 billion parameters. In other words, merely increasing model scale also improves performance. One must then wonder whether scaling other AI-NWP models (for example, GraphCast, which has only 37 million parameters) to match Aurora’s billion-parameter scale would improve their performance as well, even in the absence of more diverse pretraining data.
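One way to read this scaling result is as a power law in parameter count N. Assuming, for illustration only, that forecast error scales as N to the power of minus alpha (this is not a fit reported here), a 6% error reduction per tenfold increase in parameters implies a small exponent:

$$
\frac{\mathrm{error}(10N)}{\mathrm{error}(N)} = 10^{-\alpha} \approx 0.94
\quad\Longrightarrow\quad
\alpha = -\log_{10} 0.94 \approx 0.027
$$

Under that assumption, each further gain of this size would require roughly another order of magnitude of parameters.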

Despite these lingering questions, the Aurora paper does show that the model can be finetuned to make accurate predictions about more esoteric atmospheric variables. This has obvious applications, including, as the authors show, ocean wave forecasting and aerosol pollution tracking. While this foundation modeling approach is about as far removed as possible from the interpretable, physics-informed differential equation coupling underlying traditional numerical weather forecasting, its impressive performance marks the arrival of the “bitter lesson” in NWP. Whether Aurora’s outperformance is large enough to warrant more widespread adoption of foundation modeling approaches to medium-range weather prediction remains to be seen.

Probabilistic forecasting

One of the major remaining deficiencies of AI-NWP models compared to traditional NWP approaches is their lack of calibrated uncertainty estimates. The AI-NWP models discussed thus far are trained to make deterministic weather predictions by minimizing a mean loss against the observed atmospheric state at each historical space-time point seen during training. This training regimen pushes AI-NWP models to produce weather state forecasts that average over any uncertainty in the underlying predictions. While minimizing predictive loss improves accuracy on average, it can lead to unrealistically “blurred” forecasts, as the model trades sharp and realistic weather patterns for more diffuse ones that score better on average. In other words, there is a precision-accuracy tradeoff inherent to the AI-NWP models we have discussed thus far. A precise, realistic forecast is heavily penalized when it is wrong: a storm placed slightly off its true track is counted as an error twice, once where the storm was predicted and once where it actually occurred. By contrast, a forecast blurred toward the mean patterns observed in the training data is never exactly right for any single outcome (because it is unrealistic), but it will be more accurate on average.
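This double penalty can be checked with a toy calculation. The sketch below assumes a hypothetical one-dimensional grid of 10 cells and a storm that, with equal probability, occurs in cell 3 or cell 6; it compares the expected mean squared error of a sharp forecast that commits to one location with a blurred forecast that spreads half the intensity over both.

```python
import numpy as np

n_cells = 10
outcomes = []
for storm_cell in (3, 6):                      # two equally likely storm locations
    truth = np.zeros(n_cells)
    truth[storm_cell] = 1.0
    outcomes.append(truth)

sharp = outcomes[0].copy()                     # commits to one realistic location
blurred = np.mean(outcomes, axis=0)            # half intensity at both locations

def expected_mse(forecast):
    """Mean squared error averaged over the equally likely outcomes."""
    return float(np.mean([np.mean((forecast - truth) ** 2) for truth in outcomes]))

mse_sharp = expected_mse(sharp)
mse_blurred = expected_mse(blurred)
print(mse_sharp, mse_blurred)                  # 0.1 0.05
```

The blurred forecast halves the expected error even though it never matches any storm that could actually occur.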

This blurring behavior contrasts with traditional NWP models, which are designed to output sharp and realistic weather state trajectories by solving a collection of physically informed differential equations. Forecast probabilities are derived by modeling uncertainty in the observed initial conditions and propagating this uncertainty through the simulated weather dynamics with an ensemble of model runs. Each member of ECMWF’s ensemble forecast (ENS) is derived from the differential equations governing atmospheric dynamics and therefore represents a particular, sharp prediction of the atmospheric state at a future time. Because each ensemble member is a spectrally realistic weather trajectory, aggregating over members initialized with samples from a distribution over the present weather state produces meaningful probabilities over potential weather trajectories.
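The ensemble idea can be illustrated on a small chaotic system. The sketch below uses the Lorenz-63 equations as a stand-in for atmospheric dynamics (an assumption; ENS integrates a full global model): each member starts from a slightly perturbed initial condition, is integrated deterministically with a fourth-order Runge-Kutta scheme, and an event probability is read off as the fraction of members in which the event occurs. Perturbation size, member count, and the event definition are illustrative choices.

```python
import numpy as np

def lorenz63(x, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Tendencies of the Lorenz-63 system for an (n_members, 3) array of states."""
    dx = sigma * (x[:, 1] - x[:, 0])
    dy = x[:, 0] * (rho - x[:, 2]) - x[:, 1]
    dz = x[:, 0] * x[:, 1] - beta * x[:, 2]
    return np.stack([dx, dy, dz], axis=1)

def rk4_step(x, dt):
    """One classical fourth-order Runge-Kutta step for every member at once."""
    k1 = lorenz63(x)
    k2 = lorenz63(x + 0.5 * dt * k1)
    k3 = lorenz63(x + 0.5 * dt * k2)
    k4 = lorenz63(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

def run_ensemble(analysis, n_members=50, init_error=1e-3, dt=0.01, n_steps=1500, seed=0):
    """Sample initial states around the analysis and integrate each one deterministically."""
    rng = np.random.default_rng(seed)
    initial = analysis + init_error * rng.standard_normal((n_members, analysis.size))
    members = initial.copy()
    for _ in range(n_steps):
        members = rk4_step(members, dt)
    return initial, members

initial, final = run_ensemble(np.array([1.0, 1.0, 1.0]))
prob_positive_x = np.mean(final[:, 0] > 0.0)           # fraction of members with x > 0
spread_growth = final.std(axis=0).mean() / initial.std(axis=0).mean()
print(f"P(x > 0) = {prob_positive_x:.2f}, spread grew by a factor of {spread_growth:.0f}")
```

Every member is a sharp, physically consistent trajectory; the uncertainty lives in how the members disagree, not in any single blurred forecast.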

By averaging over uncertainty and forecasting the mean of probable trajectories, most AI-NWP models tend to produce unrealistic forecasts at longer time horizons that are blurry with respect to space and pressure level. This behavior is especially problematic if one wishes to extend AI-NWP methods to sub-seasonal or climatological forecasting, as their predictions will tend toward climatological means, masking potential changes in severe weather event probabilities in the process. The fast inference speed of AI-NWP models does allow one to synthesize ensemble forecasts by repeatedly perturbing the observational model inputs, but these models’ lack of physical constraints and their blurry estimates mean we typically cannot interpret the resulting forecast probabilities as equivalent to those produced by traditional NWP ensembles.

This is also a situation in which the black-box nature of AI-NWP models matters. Traditional NWP is still prone to serious forecast errors, but these errors are at least bound by a physically meaningful set of equations, which can be inspected to locate the source of an error (typically poor initial conditions). For AI-NWP methods, however, explaining an erroneous forecast is much more difficult, as the error in each forecast is a function of uncertainty in the initial conditions in conjunction with, technically, the entirety of the training dataset.

The GenCast model (Price et al., 2024) provides a machine learning approach to probabilistic forecasting that models weather trajectory distributions directly, using generative diffusion techniques similar to those underpinning many recent advances in natural image, sound, and video generation. This diffusion approach allows one to sample an arbitrary number of sharp and realistic medium-range weather forecast trajectories and produce a distribution of trajectory outcomes instead of a single mean trajectory.

For a given dataset, diffusion models seek to learn a data transformation process that can generate new observations distributed similarly to the dataset in aggregate. These new observations are typically created by sampling a known noise distribution which, through the learned transformation, approximates sampling from the data distribution itself. A (flow-based) diffusion model, in particular, takes an unknown or complex probability distribution (here, the distribution of future atmospheric states conditioned on recent atmospheric state observations) and progressively converts it to a known noise distribution (Gaussian white noise on the sphere) by building a continuous probability path connecting them. The forward direction of this process is simple to implement: add some amount of noise at each step. The backward direction, however, must be integrated iteratively using a differential equation solver. When the data distribution is complex, as is the case with atmospheric modeling, a flexible neural network becomes a required component of the backward solver, predicting the denoised sample (or, equivalently, the noise itself). This network is typically trained to invert sampled forward steps of the diffusion process: given a noised sample and its noise level, it learns to recover the clean sample. The result is a differential equation solver that can move backward along this probability path and iteratively generate denoised samples from a source of random noise.
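The training idea can be sketched in a setting small enough to check by hand. Assume, purely for illustration, that the data are one-dimensional Gaussian samples with mean 2 and standard deviation 0.5, noised as `x0 + sigma * eps`. For such data the best possible denoiser, the conditional mean of the clean sample given the noisy one, is linear with slope s^2 / (s^2 + sigma^2), so a least-squares fit of clean samples on noisy ones (the simplest possible "network") should recover it. GenCast's real denoiser is a large neural network trained on atmospheric data.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, s = 2.0, 0.5                                   # toy data distribution N(mu, s^2)
clean = rng.normal(mu, s, size=200_000)            # stand-in for training states

def fit_linear_denoiser(sigma):
    """Forward step: noise the data at level sigma. Training step: regress clean on noisy."""
    noisy = clean + sigma * rng.standard_normal(clean.shape)
    slope, intercept = np.polyfit(noisy, clean, deg=1)   # minimizes squared denoising error
    return slope, intercept

results = {}
for sigma in (0.1, 0.5, 2.0):
    slope, _ = fit_linear_denoiser(sigma)
    optimal = s**2 / (s**2 + sigma**2)             # slope of E[x0 | x0 + sigma * eps]
    results[sigma] = (slope, optimal)
    print(f"sigma={sigma}: learned slope {slope:.3f}, optimal slope {optimal:.3f}")
```

At low noise the learned denoiser nearly passes its input through; at high noise it shrinks toward the data mean. A neural network plays the same role when the data distribution is too complex for a closed form.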

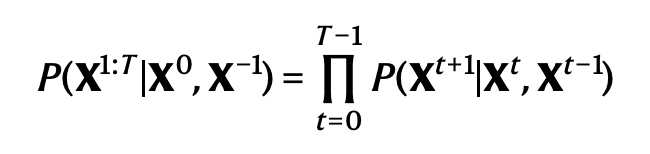

GenCast is a flow-based diffusion model of the distribution over the next atmospheric state, conditioned on the two most recent states. It creates weather trajectories by factorizing the joint distribution of a trajectory as an autoregressive product of these one-step conditional distributions, reaching back to the most recent observed time period.
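Written out, with X^t denoting the full global atmospheric state at step t and X^0, X^{-1} the two most recent observed states, the factorization reads:

$$
p\left(X^{1:T} \mid X^{0}, X^{-1}\right) = \prod_{t=1}^{T} p\left(X^{t} \mid X^{t-1}, X^{t-2}\right)
$$

Each factor is the one-step distribution the diffusion model learns, so sampling a trajectory amounts to sampling these factors in order, conditioning each on previously sampled states.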

The diffusion process begins by drawing a sample of white noise distributed on a sphere (the Earth), which is then projected onto the same latitude/longitude grid on which the weather state variables are measured. A parameterized differential equation solver is then applied to this noise sample to transform it into a sample at a lower noise level. This process is repeated 20 times, iteratively “denoising” the original white noise on the Earth until it resembles a plausible atmospheric state change into the next time period. Underlying the differential equation solver is a graph transformer architecture which takes as input a noise sample on an icosahedral mesh (the same mesh used at the finest level of the GraphCast architecture) and outputs a denoised version of the sample (equivalently, an estimate of the noise to remove).
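The iterative denoising loop can be sketched with the same overall structure (20 noise levels, each handled by a solver step that calls a denoiser), but with two loud substitutions: the data are hypothetical one-dimensional Gaussian samples, so the optimal denoiser has a closed form instead of being a graph transformer, and the solver is Heun's second-order method on an EDM-style (Karras-style) noise schedule rather than GenCast's exact solver.

```python
import numpy as np

MU, S = 2.0, 0.5   # toy data distribution N(MU, S^2), standing in for atmospheric states

def denoise(x, sigma):
    """Closed-form optimal denoiser E[x0 | x] for Gaussian data; a neural network in practice."""
    return MU + (x - MU) * S**2 / (S**2 + sigma**2)

def noise_schedule(n_steps=20, sigma_max=80.0, sigma_min=0.002, rho=7.0):
    """Karras-style noise levels from sigma_max down to sigma_min, followed by 0."""
    ramp = np.linspace(0.0, 1.0, n_steps)
    inv = sigma_max ** (1 / rho) + ramp * (sigma_min ** (1 / rho) - sigma_max ** (1 / rho))
    return np.append(inv ** rho, 0.0)

def sample(n_samples, seed=0):
    rng = np.random.default_rng(seed)
    sigmas = noise_schedule()
    x = sigmas[0] * rng.standard_normal(n_samples)         # start from pure noise
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        d = (x - denoise(x, sigma)) / sigma                 # dx/dsigma of the probability-flow ODE
        x_euler = x + (sigma_next - sigma) * d
        if sigma_next > 0:                                  # Heun correction except at the last step
            d_next = (x_euler - denoise(x_euler, sigma_next)) / sigma_next
            x = x + (sigma_next - sigma) * 0.5 * (d + d_next)
        else:
            x = x_euler
    return x

samples = sample(20_000)
print(f"mean {samples.mean():.3f}, std {samples.std():.3f}")
```

Every sample starts as noise with standard deviation 80, yet the outputs end up with mean close to 2.0 and standard deviation close to 0.5; the small remaining mismatch is discretization error from using only 20 solver steps.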

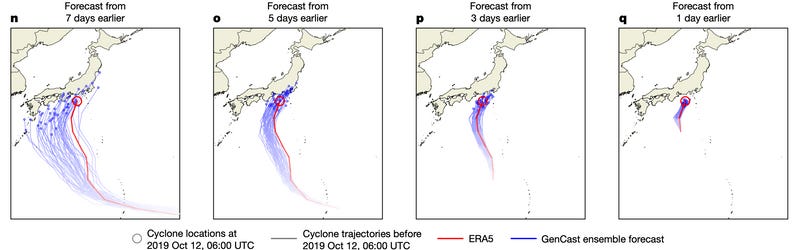

The result is an architecture which takes two recent weather states and a noise sample as input and outputs a particular weather state change, representing a sample from the modeled state change distribution conditioned on the two recent observations. This single-step state change can be fed autoregressively back into the architecture to produce a sharp weather trajectory an arbitrary number of time steps into the future. Repeating this trajectory sampling process with different noise samples yields an ensemble sample from the distribution of probable weather trajectories. GenCast generates an ensemble of stochastic 15-day global forecasts, at 12-hour steps and 0.25° latitude–longitude resolution, for more than 80 surface and atmospheric variables. This process takes approximately 8 minutes, which is slightly slower than the inference times required by non-probabilistic models but still much faster than traditional NWP approaches.
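The rollout-and-repeat procedure can be sketched as follows. `sample_next_state` is a hypothetical stand-in for one GenCast step (a toy damped AR(2) update plus Gaussian noise on a small grid), not the real model; the structure to notice is that each step conditions on the two most recent states, feeds its own sample back in, and draws fresh noise, so 30 steps of 12 hours give one 15-day trajectory and repeating with different noise gives an ensemble.

```python
import numpy as np

def sample_next_state(prev, prev2, rng):
    """Hypothetical stand-in for one 12-hour GenCast step: a random next state
    conditioned on the two most recent states (toy damped AR(2) plus noise)."""
    change = 0.5 * (prev - prev2) - 0.1 * prev + 0.2 * rng.standard_normal(prev.shape)
    return prev + change

def sample_ensemble(state_prev2, state_prev, n_members=8, n_steps=30, seed=0):
    """Return an array of shape (n_members, n_steps, *grid); 30 x 12 h = 15 days."""
    rng = np.random.default_rng(seed)
    out = np.empty((n_members, n_steps) + state_prev.shape)
    for m in range(n_members):
        prev2, prev = state_prev2, state_prev
        for t in range(n_steps):
            nxt = sample_next_state(prev, prev2, rng)   # new noise for every step and member
            out[m, t] = nxt
            prev2, prev = prev, nxt                     # feed the sample back in autoregressively
    return out

grid = (4, 8)                                           # toy latitude x longitude grid
ensemble = sample_ensemble(np.zeros(grid), np.zeros(grid))
p_exceed = (ensemble[:, -1] > 0.5).mean(axis=0)         # per-cell probability at day 15
```

Because members are independent given their noise, they can be generated in parallel, and probabilities such as `p_exceed` come from counting members rather than from a single mean forecast.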

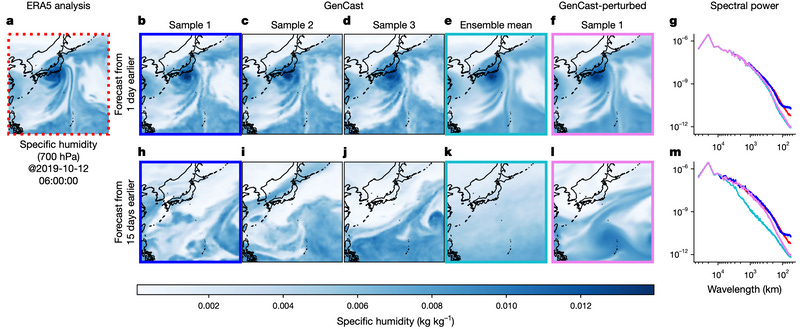

Crucially, each GenCast ensemble member trajectory is realistic and much less blurry than the forecasts of deterministic AI-NWP techniques. This sharpness can be observed by comparing the spherical harmonic power spectra of these member trajectories to that of the ERA5 ground truth weather patterns. As expected, the spectrum decays slowly (retains detail) for individual samples and for ensemble means at short lead times, but decays more quickly (becomes blurry) for ensemble means at longer lead times, where the members diverge.
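The spectral argument can be illustrated with a one-dimensional periodic field, using an FFT as a stand-in for the spherical harmonic transform (a simplifying assumption). In the hypothetical sketch below, ensemble members share their large-scale Fourier coefficients but have independent small-scale ones, roughly as members do at long lead times; averaging the members removes small-scale power while leaving the shared large scales untouched.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points, n_members, k_split = 256, 32, 8
k = np.arange(n_points // 2 + 1)                          # wavenumbers returned by rfft
amplitude = np.where(k > 0, 1.0 / np.maximum(k, 1), 0.0)  # red spectrum, no mean component

def random_coeffs():
    """Random complex Fourier coefficients with the amplitude profile above."""
    return amplitude * (rng.standard_normal(k.size) + 1j * rng.standard_normal(k.size))

shared = random_coeffs()                                  # large scales all members agree on
small_scale = k >= k_split
members = []
for _ in range(n_members):
    coeffs = shared.copy()
    coeffs[small_scale] = random_coeffs()[small_scale]    # small scales diverge between members
    members.append(np.fft.irfft(coeffs, n=n_points))
members = np.array(members)

def power_spectrum(field):
    return np.abs(np.fft.rfft(field)) ** 2

member_power = np.mean([power_spectrum(m) for m in members], axis=0)
mean_power = power_spectrum(members.mean(axis=0))
ratio = mean_power[small_scale].sum() / member_power[small_scale].sum()
print(f"small-scale power of the ensemble mean relative to members: {ratio:.3f}")
```

With independent small scales, the ensemble mean retains only about 1/n_members of the members' small-scale power, which is the "faster decay" seen in long-lead ensemble-mean spectra.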

Evaluating probabilistic forecast skill is more nuanced than the RMSE calculations underlying deterministic skill, as one must measure how well the forecast distribution matches the ground truth. Price et al. compare GenCast’s forecasts to ENS’s probabilistic forecasts by measuring how well the marginal distributions of the forecasts approximate the true predictive uncertainty in the ground truth weather. GenCast generally outperforms ENS, especially at longer lead times and higher pressure levels. Because GenCast produces probabilistic sample trajectories, comparing its performance with previous AI-NWP models that produce deterministic mean forecasts is more difficult.
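One widely used score for comparing forecast distributions with observations is the continuous ranked probability score (CRPS), which Price et al. report for GenCast and ENS. The sketch below implements a common ensemble estimator, the mean absolute error of the members minus half their mean pairwise difference; whether it matches the exact estimator used in the paper is an assumption not checked here. For a one-member "ensemble" it reduces to the absolute error, which is one way deterministic and probabilistic forecasts can be placed on the same scale.

```python
import numpy as np

def crps_ensemble(members, observation):
    """Ensemble CRPS estimate: E|X - y| - 0.5 * E|X - X'| (lower is better)."""
    members = np.asarray(members, dtype=float)
    skill = np.mean(np.abs(members - observation))                 # accuracy term
    spread = np.mean(np.abs(members[:, None] - members[None, :]))  # ensemble spread term
    return float(skill - 0.5 * spread)

print(crps_ensemble([1.0], 3.0))        # 2.0: a single member gives the absolute error
print(crps_ensemble([0.0, 2.0], 1.0))   # 0.5
```

The spread term rewards an ensemble that brackets the observation, so a sharp but well-spread ensemble can beat a single forecast that sits close to the mean.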

In some sense, deterministic AI-NWP models like GraphCast or Pangu-Weather and probabilistic AI-NWP models like GenCast are optimized for different objectives. Deterministic forecasts are useful if one would like a single, highly-optimized forecast of the weather at a particular point in the future. By contrast, probabilistic models are useful for querying the range of plausible weather outcomes at some point in the future. The ability to represent uncertainty in this way is especially useful when tracking severe weather, as one can more convincingly convey the range of plausible outcomes of a severe weather event and its associated risks to anyone potentially in its path. It will be interesting to track the interplay and competition between deterministic predictive modeling and probabilistic generative modeling within medium-range weather forecasting. If the past few years of broader developments within AI are any indication, generative models like GenCast may come to dominate in the near future, especially since their ability to generate realistic trajectory samples more closely aligns with traditional NWP methodology and reporting structures.

References

Bodnar, C., Bruinsma, W. P., Lucic, A., Stanley, M., Brandstetter, J., Garvan, P., … others. (2024). Aurora: A foundation model of the atmosphere. arXiv Preprint arXiv:2405.13063.

Price, I., Sanchez-Gonzalez, A., Alet, F., Andersson, T. R., El-Kadi, A., Masters, D., … others. (2024). Probabilistic weather forecasting with machine learning. Nature, 1–7.